An important part of the mapping conducted in Phase 2 is to gain an overview of the performance, security, and cost-effectiveness of the AI system. This forms the basis for more specific assessments and planning in the next phase.

Performance of an AI system

The performance of an AI system is closely linked to how the model has been developed and the quality of the data it has been trained on. If the training data contains systematic bias, the AI model may produce unfair or unreliable results. AI models are often highly flexible and may memorise training data without being able to generalise to new, unseen data. It is therefore essential that the model’s performance is tested on independent data that has not been included in the training.
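The following minimal Python sketch shows why testing on independent data matters. It uses hypothetical synthetic data, not data from any real AI system. A "memorising" model that stores every training example scores perfectly on the data it was built from. On unseen data it falls back to guessing. A simple rule that captures the underlying pattern performs about equally well on both sets. The data generator, the 20 % label noise and both models are illustrative assumptions.

```python
import random

def make_data(n, rng):
    """Hypothetical synthetic data: the true label is 1 when x > 0.5,
    but 20 % of labels are flipped to simulate noise."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = 1 if x > 0.5 else 0
        if rng.random() < 0.2:
            y = 1 - y
        data.append((x, y))
    return data

def accuracy(predict, data):
    """Fraction of examples where the prediction matches the label."""
    return sum(predict(x) == y for x, y in data) / len(data)

def fit_memoriser(train):
    """Stores every training example verbatim; unseen inputs get the majority class."""
    table = dict(train)
    ones = sum(y for _, y in train)
    majority = 1 if 2 * ones >= len(train) else 0
    return lambda x: table.get(x, majority)

def threshold_model(x):
    """A fixed rule standing in for a model that has learned the underlying pattern."""
    return 1 if x > 0.5 else 0

rng = random.Random(42)
train = make_data(200, rng)  # data used to build the models
test = make_data(200, rng)   # independent data, never seen during training

memoriser = fit_memoriser(train)
results = {
    "memoriser": (accuracy(memoriser, train), accuracy(memoriser, test)),
    "threshold": (accuracy(threshold_model, train), accuracy(threshold_model, test)),
}
for name, (train_acc, test_acc) in results.items():
    print(f"{name}: train={train_acc:.2f} test={test_acc:.2f}")
```

A large gap between accuracy on the training data and accuracy on held-out data is a typical sign that a model has memorised its training data rather than generalised.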

Manufacturers may have validated their solutions to varying extents. Documentation should be available that explains how the technical validation was performed, which datasets were used, and whether the AI model was tested on independent datasets. Under the MDR, manufacturers of CE-marked devices are required to document system performance. While there is no general requirement for clinical validation of AI models, such validation may be required for higher-risk medical devices (particularly classes IIb and III), in which case clinical testing may be necessary [80]. Guidance from the Medical Device Coordination Group (MDCG) covers the clinical evaluation of software as a medical device [81]. Article 61 of the MDR addresses the clinical evaluation of medical devices, while Article 62 sets out the general requirements for conducting clinical investigations.

For more information, refer to later phases of this report as well as AI fact sheets 3 and 2, which address validation and performance, respectively [82].

Security of an AI system

As part of Phase 2, an overall assessment should be conducted to determine whether available AI systems on the market meet acceptable security standards.

The Code of Conduct for information security and data protection (Normen) is a helpful tool for safeguarding information security and privacy in the health and care sector. It is technology-neutral and includes, among other things, dedicated articles on the use of artificial intelligence [83].

Evaluation of method and knowledge base

If a health technology assessment (HTA) has already been conducted for the AI system prior to procurement, it can provide valuable insights. Full HTAs offer a robust foundation for decision-making, as they are based on thorough scientific methodology and may include a comprehensive impact assessment. Given that AI remains a relatively new field, few full HTAs have been completed for AI systems so far.

Health Technology Assessment (HTA)

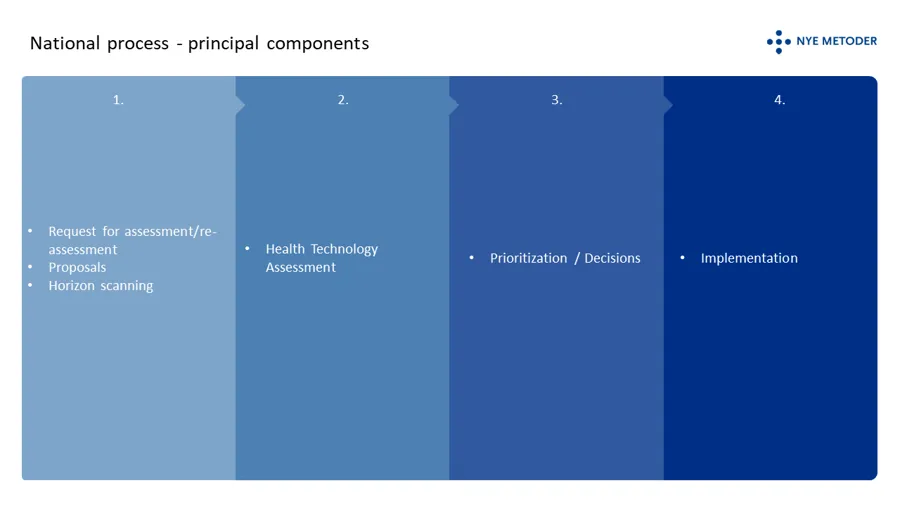

A Health Technology Assessment (HTA) is an interdisciplinary process that evaluates the efficacy, safety, ethics, organisational impact, cost-effectiveness, and budgetary consequences of introducing or phasing out a technology in the healthcare system [84][85].

The New Methods system (Nye metoder) is Norway's national framework for determining which treatment methods may be offered within the specialist health service. The Norwegian Institute of Public Health and the Norwegian Medical Products Agency perform HTAs on behalf of the Ordering Forum for New Methods (Bestillerforum for nye metoder) [86]. The Norwegian Radiation and Nuclear Safety Authority (DSA) assists in the HTA if the method uses radiation or may have an impact on the medical use of radiation. A national strategy is currently being developed to guide the further evolution of the New Methods system [87]. Additionally, a working group has been appointed to propose a framework, objectives, and criteria for assessing medical devices within the system.

Mini-method assessments

Many decisions are made at the local level, where mini-HTAs may be relevant. A mini-HTA is a simplified version of the national HTA and is tailored for use in both hospitals and municipalities. The Norwegian Institute of Public Health (NIPH) is responsible for mini-HTAs. On its website it provides guidance as well as a national database of all completed and ongoing assessments [88]. The database also lists contact persons for previously completed mini-HTAs.

According to the Radiation Protection Regulations, any new method that involves or affects the use of radiation must undergo a generic justification assessment. Method assessments that address radiation safety aspects are generally considered sufficient documentation in this context [89].

Lessons learnt from peer-reviewed publications

Peer-reviewed articles provide an independent description of the technology and offer documentation of its effectiveness, including evaluations of clinical utility. While the CE marking confirms that the product meets regulatory requirements for safety and performance, peer-reviewed literature can serve as an important complementary source of evidence.

Experiences from users of comparable AI systems

Systematic experience-sharing is valuable when new technologies are being introduced. Organisations should consider contacting others that have gained practical experience with similar AI systems. For example, user experiences related to interfaces and realised benefits are often best understood by consulting actual users in other healthcare institutions or countries. Suppliers may also be able to provide references to other organisations that have implemented their AI systems.

[81] MDCG 2020-1: Guidance on Clinical Evaluation (MDR) / Performance Evaluation (IVDR) of Medical Device Software

[82] AI fact sheets will be published on Kunstig intelligens – Helsedirektoratet

[83] Security risks in artificial intelligence systems - what do we know and what can we do? - eHealth

[90] See in particular section 39 (Justification): Forskrift om strålevern og bruk av stråling (strålevernforskriften) (lovdata.no)